No matter how brilliant your idea seems at the brainstorming stage, you don’t really know if it works until you put it in front of your audience.

This is where creative testing comes in.

Platforms are noisier than ever, attention spans are getting shorter by the day, and paid social teams are under pressure to produce results with half the time, budget, and internal resources they had just two years ago.

What’s changed isn’t the need for better creative; it’s the need for a repeatable, testable system that turns raw content into ROI.

And yet, most brands still approach UGC and influencer content like they did in 2019: produce a few videos, run a campaign, and hope something sticks. There’s no real visibility into why one version worked and another didn’t.

This guide shows you how to build a testing system that goes beyond surface-level tweaks, how to use UGC and influencer content as flexible, high-performing assets, and how to structure tests that actually teach you something. You’ll learn how to form clear hypotheses, source modular content, test on a platform-by-platform basis and, most importantly, turn insights into action.

What is Creative Testing?

Creative testing involves experimenting with different variations of ad content to see which ones perform best. You can tweak hooks, visuals, copy, and other elements to figure out which combination resonates most with your audience.

It gives you the cold, hard data you need to make decisions and improve your ad game. For paid social teams, this often means testing UGC videos with different hooks, different content creators, or even small edits like voiceover vs. no voiceover.

You might be testing:

- Creator A vs. creator B

- Hook-first vs. problem-first intros

- Raw UGC vs. polished edits

- Short-form vs. long-form videos

- Voiceover vs. on-screen text only

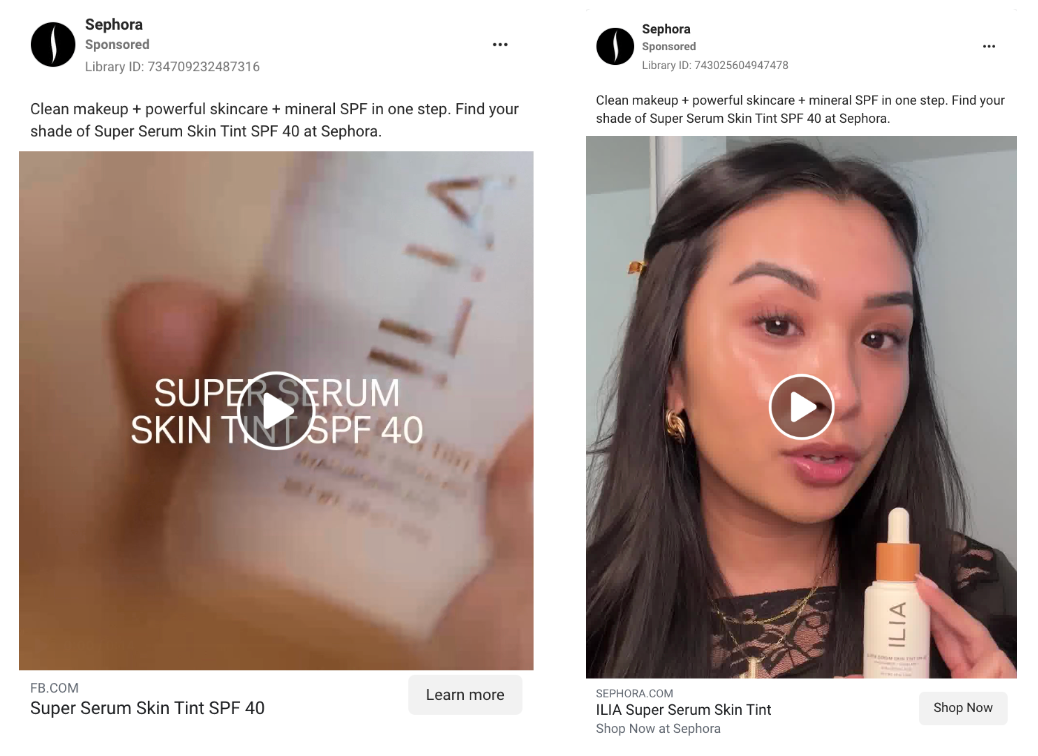

This ad by Sephora uses the same copy with a different ad creative and CTA.

Why Ad Creative Testing Matters

Most teams don’t have the resources to throw spaghetti at the wall and see what sticks. By testing your ad creatives, you can figure out which combination of content gets the best results from your audience.

This is particularly true when it comes to testing user- and influencer-generated content, a.k.a. content that mirrors how people naturally talk about and use your product. Doing this helps you uncover the messages and formats that actually convert and, once you find a winning formula, you can double down and scale.

Here are just a few of the benefits that testing ad creatives can get you:

- Higher ROI. You get more bang for your buck when you’re consistently putting out ads that actually work. The more you know about what works, the less money you have to pump in to get the results you want.

- Faster learnings. You can quickly identify what works and what doesn’t so your next round of creative is already optimized.

- Better creative briefs. When you know exactly what kind of creatives work, you can write better briefs for creators moving forward.

- Modular content becomes powerful. With multiple variations of hooks, angles, and edits, you can remix content into dozens of high-performing ads.

Building Your Ad Creative Testing Framework

The initial time investment in creative testing can seem like a lot, but over time it’ll help you build a repeatable system that you can learn from. Eventually, that system becomes a slick ad process that consistently produces stronger creative.

The idea is to follow a clear process: form a hypothesis, prep your assets, run tests, and then tweak as necessary.

Let’s break it down.

Form a Clear Hypothesis

Before you touch your ad account, you need to know what you’re testing and why. That means forming a clear hypothesis.

A good creative testing hypothesis looks like this:

“We believe [this creative variable] will outperform [this baseline] because [this rationale].”

For example:

- “We believe that videos with a strong hook in the first 3 seconds will outperform our current product-led ads because they’ll stop the scroll faster.”

- “We believe Creator A will drive higher engagement than Creator B because their content style feels more authentic and matches our audience.”

- “We believe problem-first storytelling will lower CPMs compared to benefit-first messaging because it creates curiosity and tension.”
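If you track hypotheses in a script or spreadsheet export, one option is to store the template’s three slots as separate fields. That way, every test gets written the same way. The minimal Python sketch below does this. The `Hypothesis` class and its extra `metric` field are hypothetical illustrations and are not part of any tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    """One creative test hypothesis, split into the template's slots (hypothetical structure)."""
    variable: str   # the creative variable you're changing
    baseline: str   # what the variant is compared against
    rationale: str  # why you expect it to win
    metric: str     # hypothetical extra field: the metric that will decide the test

    def statement(self) -> str:
        # Render the fields back into the "We believe..." template sentence
        return (
            f"We believe {self.variable} will outperform {self.baseline} "
            f"because {self.rationale}."
        )


hook_test = Hypothesis(
    variable="videos with a strong hook in the first 3 seconds",
    baseline="our current product-led ads",
    rationale="they'll stop the scroll faster",
    metric="thumb-stop rate",
)
print(hook_test.statement())
```

Keeping the deciding metric next to the sentence makes it harder to move the goalposts once results come in.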

Notice how each statement is specific, testable, and not just a wild assumption. Your hypothesis should ultimately be an “educated bet” that you can validate with real data. This matters because your hypothesis shapes everything else.

It shapes what kind of footage you request from creators, how you structure your edits and ad variants, which performance metrics you track, and how you interpret the results.

Let’s say your hypothesis is about the impact of the hook.

You’ll want to brief creators for multiple hook variations. You’ll test those hooks in isolation to see which performs best, and measure metrics like thumb-stop rate, watch time, and CTR to validate the outcome.

💡 Pro tip: If you’re working with Insense, you can bake these hypotheses right into your creative briefs. Our modular UGC brief templates let you clearly define the types of assets you need to test your assumptions.

Source and Prepare UGC Variants

Now that you’ve formed your hypothesis, you can start gathering the raw materials for testing (a.k.a. your content variants).

Psst… This is where Insense really shines. Our platform was designed to help you source performance-ready, modular UGC at scale.

Before we dive into the process, let’s get clear on what you’re collecting and why it matters.

Modular Content = The Foundation of Creative Testing

Modular UGC is a unique approach to content production where creators deliver multiple versions of specific elements (like multiple hooks or clips) so you can mix, match, and test different combinations across platforms.

When you ask a creator for a video, you’re not really just asking them for “a single video”. You’re asking them for the building blocks that make up the video and how it’s presented to the world.

This might include requesting:

- Multiple hooks that aim to grab attention in the first few seconds.

- Problem/solution statements and different messaging angles.

- Testimonials from real users or influencers.

- CTAs tailored to different stages of the funnel.

- B-roll content or versatile product footage.

- Product shots that highlight different features or use cases.

With Insense’s self-serve platform, you can brief creators to deliver all these elements. Our modular UGC brief template helps you define exactly what you need, down to the number of takes, camera orientation, tone, and platform-specific requirements.

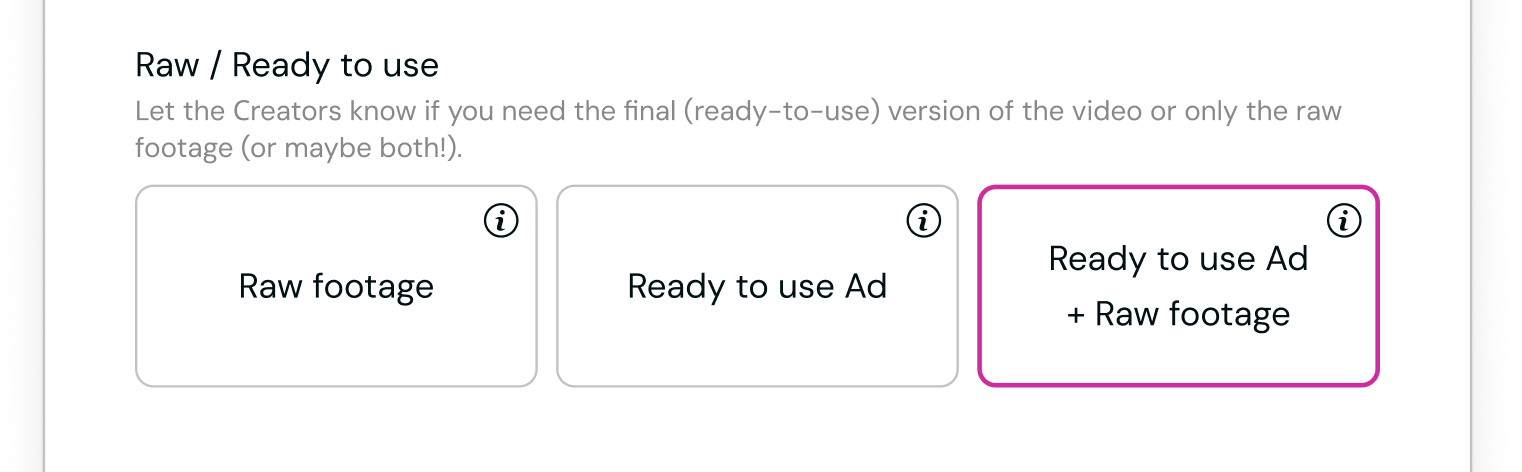

And yes, you can (and should!) request both raw and edited footage. The raw assets give your editors the chance to experiment with different shots, while the edited versions are ready to run if you’re short on time.

Prefer a Hands-Off Approach? We’ve Got You

If you’d rather focus on strategy and leave the content orchestration to someone else, our Managed Services team can take over.

They’ll:

- Write briefs based on your testing hypothesis.

- Scout the right creators for your target audience.

- Direct the shoot for performance-focused content.

- Edit multiple ad variants for A/B or multivariate testing.

- Deliver everything in a ready-to-run format.

Turning Raw Clips into Testing Gold

Once you have your footage (whether you gathered it yourself or had our team help you out), the fun begins. Because modular content isn’t linear (it’s combinable), you can remix assets into dozens of variants, like:

- Hook A + testimonial C + CTA B

- Problem/solution B + hook C + B-roll A

- Same message, but delivered by Creator 1 and Creator 2 for side-by-side testing

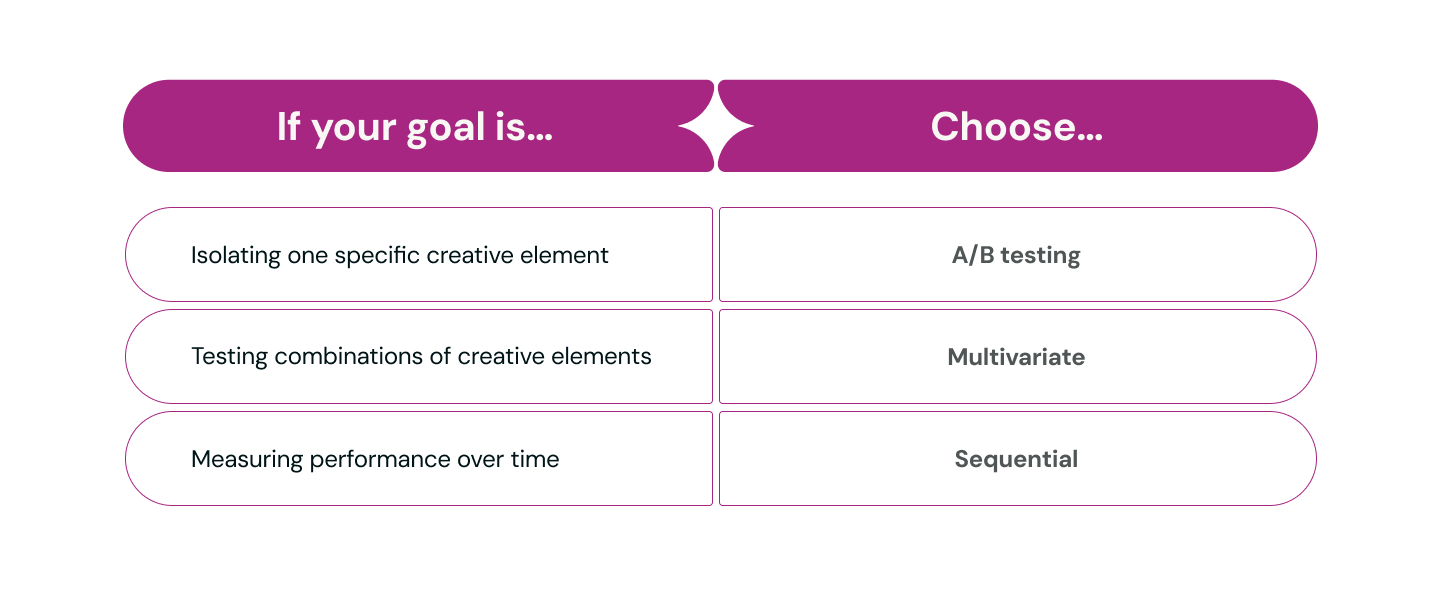

Choose Your Advertising Testing Methods

Pick A/B, multivariate, or sequential testing based on your goals.

The way you test will directly influence the quality of your insights and the speed at which you can optimize your campaigns.

So, let’s walk through the three most common advertising testing methods, what they’re good for, and how to choose the right one for your specific needs.

A/B Testing

A/B testing pits two versions of an ad against each other, changing only one variable to isolate its impact.

You keep everything else constant and test a single change. That might be the hook, the CTA, or even the creator.

For example, you might have two videos that are identical except for the opening hook:

- Hook A: “Tired of dry skin?”

- Hook B: “This product saved my winter skincare routine.”

With A/B testing, you’d run both versions, monitor their performance, and determine which one is better at grabbing attention.

A/B testing is particularly handy if you’re early in the testing process, have a narrow budget, or are testing high-impact elements.
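To decide whether one hook genuinely beats the other, rather than winning by chance, a common approach is a two-proportion z-test on CTR. Here’s a minimal sketch. It assumes you have clicks and impressions for each version and enough volume for the normal approximation to hold. The numbers are made up, and the built-in test tools on ad platforms may use different statistical methods.

```python
import math


def two_proportion_z_test(clicks_a: int, imps_a: int, clicks_b: int, imps_b: int):
    """Two-sided z-test for a difference in CTR between two ad variants.

    Returns (ctr_a, ctr_b, z, p_value). Uses the pooled-proportion standard
    error and the normal approximation, so it assumes reasonably large counts.
    """
    ctr_a = clicks_a / imps_a
    ctr_b = clicks_b / imps_b
    pooled = (clicks_a + clicks_b) / (imps_a + imps_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / imps_a + 1 / imps_b))
    z = (ctr_b - ctr_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return ctr_a, ctr_b, z, p_value


# Hypothetical results: Hook A vs. Hook B, 20,000 impressions each
ctr_a, ctr_b, z, p = two_proportion_z_test(180, 20_000, 240, 20_000)
print(f"Hook A CTR {ctr_a:.2%}, Hook B CTR {ctr_b:.2%}, z={z:.2f}, p={p:.4f}")
```

A small p-value (for example, below 0.05) suggests the CTR gap is unlikely to be noise alone. A large one means you should keep the test running or treat the two hooks as roughly equal.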

Multivariate Testing

Multivariate testing involves testing multiple variables simultaneously.

Instead of just Hook A vs. B, you might test Hook A + CTA A vs. Hook B + CTA B vs. Hook A + CTA B… and so on.

Let’s say you have:

- 3 Hooks

- 2 CTAs

- 2 Creators

That gives you 12 possible combinations (3 x 2 x 2). Run all 12 ads, and use performance data to figure out which combination works best.

This method works best when you’ve already identified a few strong content pieces and want to see how they perform together, or when you’re working with modular content.
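The 3 × 2 × 2 arithmetic above is a full-factorial grid, which you can enumerate directly. This Python sketch uses placeholder asset labels. Swap in your own modular pieces.

```python
from itertools import product

# Hypothetical asset labels; replace with your own hooks, CTAs, and creators
hooks = ["Hook A", "Hook B", "Hook C"]
ctas = ["CTA A", "CTA B"]
creators = ["Creator 1", "Creator 2"]

# Every combination of one hook, one CTA, and one creator
variants = [
    {"hook": h, "cta": c, "creator": cr}
    for h, c, cr in product(hooks, ctas, creators)
]

print(len(variants))  # 3 x 2 x 2 = 12
for i, v in enumerate(variants, start=1):
    print(f"Ad {i:02d}: {v['hook']} + {v['cta']} + {v['creator']}")
```

The grid grows multiplicatively. Adding a fourth hook takes it from 12 ads to 16, and each ad then gets a smaller slice of the budget.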

Sequential Testing

Sequential testing involves rotating creative over time. You might run one version for a week, then swap in the next. It’s less controlled than A/B or multivariate testing, but it’s still useful when your budget or platform rules make side-by-side testing impractical.

For example, you might run Creator A’s video in week one, then swap in Creator B’s version in week two. You can then compare performance across those periods to see which one performed best.

This method is good if you’re working with a limited budget or small audiences, or if you want to test ad fatigue or longevity over time.

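Here’s a quick cheat sheet:

- A/B testing. Changes one variable at a time. Best when you’re early in the testing process, have a narrow budget, or are testing high-impact elements.

- Multivariate testing. Tests several variables at once. Best when you already have a few strong content pieces or modular content to combine.

- Sequential testing. Rotates creative over time. Best for limited budgets, small audiences, or measuring ad fatigue and longevity.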

Set Up Platform‑Specific Advertising Testing

One of the most common pitfalls in creative concept testing is assuming that what works on one platform will translate neatly to another. Spoiler: it doesn’t. Each platform has its own user behaviors, creative trends, and content norms.

Here’s how you can align your testing strategy with the unique dynamics of each platform.

Instagram Creative Testing

Instagram users scroll fast and expect beautiful, well-composed content, but not necessarily glossy brand ads. The sweet spot is UGC that looks native, with thoughtful edits and clear messaging that stops the scroll.

Use Instagram Reels and Story placements to test:

- Branded vs. native-feel edits. A slick brand edit vs. a raw, face-to-camera UGC clip.

- Voiceover vs. on-screen subtitles. Many users scroll with sound off. Test subtitles to improve comprehension and retention.

- Single creator vs. compilation format. Do personal, one-to-one messages work better than multi-voice mashups?

- Product showcase vs. lifestyle storytelling. Try testing product-first ads versus “this is how I use it every day” narratives.

Use ad set-level testing in Meta’s Ads Manager: duplicate your ad sets, keep the audience, budget, and placements consistent, and test only one creative element at a time, like a different hook or visual approach.

Facebook Creative Testing

On Facebook, users are more open to longer-form, value-driven content.

Here’s what to test:

- Problem-first vs. product-first messaging. Starting with a relatable struggle can build curiosity and empathy.

- Value-driven hooks vs. testimonial-led intros. Which angle drives more engagement and trust?

- Text overlays, headlines, and CTA buttons. Facebook gives you space, so use it to guide attention and prompt clicks.

- Video vs. carousel formats. Test which Facebook ad format gets more engagement or drives lower cost per result.

Use Facebook’s Dynamic Creative Testing to mix and match creative components (like images, videos, headlines, and descriptions). It’s particularly useful if you’re already using modular UGC.

To create an A/B test in Ads Manager:

- Go to Ads Manager.

- Click A/B test in the toolbar.

- Next, select either Make a copy of this ad or Select two existing ads.

- Choose the ad from the drop-down menu that you want to set up for the A/B test.

- Click Next.

Insense tip: Meta whitelisting (which you can do with Insense) lets you run ads under a creator’s handle. This adds authenticity and helps your UGC feel less like an ad and more like a trusted recommendation.

TikTok Ad Testing Methods

TikTok thrives on authenticity, humor, and immediacy. You’ll have the best success if your TikTok ads are real, fast, and stop the scroll within the first three seconds (ideally quicker).

Use TikTok to test:

- Hook speed and pacing. The first 1–3 seconds matter. Try shock, humor, or curiosity as your opener.

- Creator energy and tone. Some audiences respond to high-energy delivery; others to a calm, “just chatting” vibe.

- Trending sounds vs. original audio. Test whether your ad performs better with native trends or custom messaging.

- Direct product showcase vs. narrative-style storytelling. Should you go straight to the point or let the story unfold?

TikTok’s Ads Manager lets you run split tests, but it can be clunky. You can always run multiple ad groups manually and control budgets to keep the comparison as simple and as clean as possible.

Here’s how to run a split test on TikTok:

- Go to TikTok Ads Manager.

- Click the Campaign tab.

- Click Create.

- Choose an advertising objective.

- Click the Create a split test toggle.

- Click Continue.

- Complete the settings in the Ad group tab and continue.

- Complete settings under the Ad tab and continue.

- Select a Split test variable in the Split test tab.

- Select a Key metric. The system uses this metric to compare the two ad groups and determine which one performs best.

- Set up your Test ad group.

- Click Complete.

Analyze Results & Optimize

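Once your tests have run, see which versions win and use those results to update your next batch of creative. Insense also includes a performance dashboard to help you track results in one place.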

Your creative testing is only as good as the results you take from it, and we’re not just talking about vanity metrics here.

Track things like:

- Views. How many people actually saw the ad?

- Engagement. Likes, comments, and shares signal that your ads resonated with your target audience.

- Click-Through Rates (CTR). Are people taking action? This reveals how compelling your creative really is.

- CPM (cost per mille, i.e. cost per 1,000 impressions). How efficiently are you reaching people? A useful indicator of ad quality in the eyes of the platform.

- Spend per Creative. Which assets are soaking up your budget, and are they worth it?

- ROI. The ultimate performance metric. What are you getting back from what you put in?
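Most of these metrics are simple ratios. As a rough sketch, assume you’ve exported impressions, clicks, engagements, spend, and attributed revenue for each creative. The field names and figures below are hypothetical. Views are left out because each platform counts a “view” differently. ROI is computed here as (revenue - spend) / spend, and engagement rate as engagements / impressions, which is one common definition among several.

```python
from dataclasses import dataclass


@dataclass
class CreativeStats:
    """Raw numbers for one creative, as exported from an ads manager (hypothetical fields)."""
    name: str
    impressions: int
    clicks: int
    engagements: int  # likes + comments + shares
    spend: float      # in your account currency
    revenue: float    # attributed revenue, per your attribution model


def summarize(stats: list[CreativeStats]) -> list[dict]:
    """Compute per-creative ratios, plus each creative's share of total spend."""
    total_spend = sum(s.spend for s in stats)
    rows = []
    for s in stats:
        rows.append({
            "creative": s.name,
            "ctr": s.clicks / s.impressions,                   # click-through rate
            "engagement_rate": s.engagements / s.impressions,  # one common definition
            "cpm": s.spend / s.impressions * 1000,             # cost per 1,000 impressions
            "spend_share": s.spend / total_spend,              # share of the test budget
            "roi": (s.revenue - s.spend) / s.spend,            # return per unit spent
        })
    return rows


# Hypothetical numbers for a hook-first vs. product-first test
report = summarize([
    CreativeStats("Hook-first", 50_000, 600, 1_500, 400.0, 1_200.0),
    CreativeStats("Product-first", 40_000, 360, 900, 400.0, 800.0),
])
for row in report:
    print(row)
```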

Don’t view these metrics in isolation. Pair them with the hypotheses you started with. If you tested whether a hook-first approach would outperform a product-first intro, did CTR improve? Did your CPM drop? Did engagement rise?

If the data supports the hypothesis, you’ve found a high-performing direction to scale. If it doesn’t, you’ve still gained valuable information: now you know what not to double down on.

Your testing loop is only complete once those learnings are applied to the next round of creative.

Inside Insense, you can take your top-performing elements (like specific hooks, creators, and CTAs) and plug them directly into new briefs for future campaigns. Our self-serve platform makes this fast and repeatable, and our Managed Services team can help you translate performance data into strategic direction if you want expert support.

Actionable Tips for Concept & Creative Testing

It’s easy to overcomplicate things when you’re setting up creative tests. But the best testing environments are focused, structured, and easy to learn from.

Here are five tried-and-true tips to help you get the most from your creative testing efforts:

1. Limit Variants to 3–5 Options

In theory, testing more variants gives you more data. But in practice, it spreads your budget too thin, dilutes your impressions, and makes it harder to draw meaningful conclusions.

Aim for 3 to 5 creative variants per test.

That’s enough to spot patterns without introducing too many variables. It also helps your campaign reach statistical significance faster so you can act on insights sooner.

For example, test Hook A vs. Hook B vs. Hook C, not Hook A through Hook G with three CTA variations each.
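A quick back-of-the-envelope check shows why extra variants thin out your data. This sketch uses the standard normal-approximation sample-size formula for comparing two proportions. It estimates how many impressions each variant needs to detect a CTR lift, then compares that with what an evenly split budget buys. The baseline CTR, target CTR, budget, and CPM are all hypothetical.

```python
import math
from statistics import NormalDist


def impressions_needed(baseline_ctr: float, target_ctr: float,
                       alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate impressions per variant to detect baseline_ctr -> target_ctr.

    Normal-approximation sample size for a two-sided test of two proportions.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = baseline_ctr * (1 - baseline_ctr) + target_ctr * (1 - target_ctr)
    n = (z_alpha + z_beta) ** 2 * variance / (target_ctr - baseline_ctr) ** 2
    return math.ceil(n)


def impressions_per_variant(budget: float, cpm: float, n_variants: int) -> int:
    """Impressions each variant gets if the budget is split evenly at a given CPM."""
    return int(budget / cpm * 1000 / n_variants)


# Hypothetical: detect a lift from 1.0% to 1.3% CTR on a $1,000 budget at a $10 CPM
needed = impressions_needed(0.010, 0.013)
for n in (3, 7):
    available = impressions_per_variant(budget=1_000, cpm=10, n_variants=n)
    verdict = "enough" if available >= needed else "not enough"
    print(f"{n} variants: {available:,} impressions each, need ~{needed:,} ({verdict})")
```

Under these assumptions, three variants each clear the bar, but seven fall short. Comparing many variants against each other also raises the odds of a false winner, which makes the case for fewer options even stronger.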

2. Use Real Audience Segments

Always test with audiences that reflect your actual buyer.

It’s tempting to run broad tests for quicker reach, but that can give you skewed data. A video that performs well with a broad interest-based audience might fall flat with your high-value customers.

Instead, test with real, revenue-generating segments, like previous purchasers, high-intent lookalikes, or warm leads from your email list.

3. Run Tests at Peak Engagement Times

Look, if you’re testing creative at 2am on a Tuesday, your results may say more about timing than the creative itself.

Schedule your tests to run during peak engagement hours for your target market. That usually means:

- Weekdays between 9am–1pm or 5pm–8pm (depending on platform and region)

- Weekends for lifestyle or consumer products

- Platform-specific peak times (TikTok often peaks at night, Instagram during lunch)

This gives your creatives a fair shot and helps you avoid mistaking low impressions for poor performance.

4. Keep a Simple Test Log

It’s surprisingly easy to lose track of what you tested, what worked, and why.

Create a simple, centralized test log where you store all your creatives, variants, and results.

Consider adding things like:

- Date launched

- What was tested (hypothesis + creative variations)

- Audience

- Budget

- Results

- Key learnings

This lets your whole team see what’s been tried and stops you from running the same tests over and over again. Even better, it gives you a historical view of what’s consistently working, so you can build a creative playbook over time.
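A shared spreadsheet is usually enough. If your team prefers scripts, here’s a minimal sketch that appends one row per test to a CSV file using the fields from the checklist above. The `CreativeTestEntry` class, the file name, and the example values are hypothetical.

```python
import csv
import tempfile
from dataclasses import asdict, dataclass, fields
from datetime import date
from pathlib import Path


@dataclass
class CreativeTestEntry:
    """One row of a creative test log (hypothetical structure)."""
    date_launched: date
    hypothesis: str
    variants: str       # e.g. "Hook A | Hook B | Hook C"
    audience: str
    budget: float
    results: str        # headline numbers for each variant
    key_learnings: str


def append_entry(path: Path, entry: CreativeTestEntry) -> None:
    """Append an entry to a CSV log, writing the header row if the file is new."""
    is_new = not path.exists()
    with path.open("a", newline="", encoding="utf-8") as f:
        names = [fl.name for fl in fields(CreativeTestEntry)]
        writer = csv.DictWriter(f, fieldnames=names)
        if is_new:
            writer.writeheader()
        writer.writerow(asdict(entry))  # csv converts the date and float via str()


# Throwaway location for this demo; in practice, point this at a file your team shares
log_path = Path(tempfile.mkdtemp()) / "creative_test_log.csv"
append_entry(log_path, CreativeTestEntry(
    date_launched=date(2024, 5, 6),
    hypothesis="Hook-first intros will beat product-first intros on CTR",
    variants="Hook A | Hook B | Hook C",
    audience="Past purchasers",
    budget=1500.0,
    results="Example only: fill in CTR, CPM, and ROI per variant",
    key_learnings="Example only: what you'd brief differently next time",
))
```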

5. Test One Element at a Time

If you change three things at once, you won’t know which one made the difference. That’s why it’s critical to test one creative element per variant.

For example:

- Test different hooks, but keep the CTA, creator, and edit style the same.

- Then in the next round, test different CTAs using the same hook.

This methodical approach may feel slow, but it’s the fastest way to learn what’s actually moving the needle.
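If you describe each variant as a set of named elements, you can check this rule automatically before launching. Here’s a small Python sketch. The element names and values are hypothetical.

```python
def changed_elements(control: dict, variant: dict) -> list[str]:
    """Return the creative elements that differ between two ad variants."""
    keys = control.keys() | variant.keys()
    return sorted(k for k in keys if control.get(k) != variant.get(k))


def check_single_variable(control: dict, variant: dict) -> str:
    """Return the one changed element, or raise if zero or several changed."""
    diff = changed_elements(control, variant)
    if len(diff) != 1:
        raise ValueError(f"Expected exactly one change, found {diff or 'none'}")
    return diff[0]


# Hypothetical control ad and two candidate variants
control = {"hook": "Hook A", "cta": "Shop now", "creator": "Creator 1", "edit": "raw"}
round_one = {**control, "hook": "Hook B"}                     # only the hook changes
muddled = {**control, "hook": "Hook B", "cta": "Learn more"}  # two changes at once

print(check_single_variable(control, round_one))  # hook
```

Calling `check_single_variable(control, muddled)` raises a `ValueError` listing `cta` and `hook`. That’s exactly the kind of test where you wouldn’t know which change made the difference.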

Your Roadmap to Mastering Creative Testing

If you’ve made it this far, you already know that testing UGC and influencer content needs a repeatable process that helps you identify what actually works.

You don’t need a huge team or an unlimited budget; you just need the right framework, the right creators, and the right tools to pull it all together.

That’s exactly what Insense is built for.

Whether you want to manage creative testing in-house or hand it off to a team of experts, Insense gives you everything you need, from sourcing creators and briefing them for modular content to testing ad variants and tracking performance in one place.

Ready to turn creative testing into your competitive edge? Book a free demo and see how we can help you scale smarter with content that’s built to convert.